*Our firm’s AI Market Commentary dated as of 8/19/2023 has been updated with an important footnote because, without additional clarifying information, it can be interpreted as stating that we are using AI more extensively and more directly for investment selection than we intended to represent. Our AI Market Commentary previously stated that, “We are also using AI to help generate more complete models and help with investment selection. For example, The Argos AlphaESG Index™ that we created is built on both fundamental and AI methods.” Dylan Minor developed the AlphaESG Index™ based on combining conventional financial metrics and metrics generated by an AI model he beta-tested and helped further develop with another professor at George Washington University. However, the final implemented version of the index currently uses AI-generated signals from an unaffiliated third-party entity AI model. Beyond this index, which many Omega Financial Group clients invest in through a BNP Paribas offering, Omega Financial Group does not currently use AI-based models to assist in investment selection. As far as wealth management decisions beyond investing, Dr. Minor is currently testing some AI-based models to help potentially create better strategic wealth management models. Finally, Dr. Minor is also analyzing Omega Financial Group’s current technical tools to understand their reliance on AI-based models, as well as their robustness against some of the problems that can arise in AI-based models.

“The 9000 series is the most reliable computer ever made. No 9000 computer has ever made a mistake or distorted information. We are all, by any practical definition of the words, foolproof and incapable of error.”

HAL, the supercomputer, in the movie 2001: A Space Odyssey

It seems difficult to make it through the day without hearing, seeing, or discussing something about artificial intelligence. But is it really such a big deal? And what really is it? Hasn’t it been around for a long time? What might it do to our economy, jobs, and financial markets? What are we doing about it? These are the questions we’ll attempt to answer in this commentary.

What really is AI?

Broadly, AI is a catch-all term for technology that attempts to mimic human intelligence. The term Artificial in AI is an apropos modifier, as today, machines are far from what we would generally classify as true intelligence. Some people dream (or have nightmares) over the notion of general AI, which would be capable of matching and exceeding human intelligence. However, most experts agree this day is far away, and some say it may never arrive.

Artificial intelligence has been around for quite some time. I’ll provide just three modern examples of AI applications. Earlier uses of artificial intelligence include enabling a human to speak directly with a machine, so-called voice commands. For example, we have all experienced speaking with Siri on our Apple products, Alexa on Amazon devices, or Google Assistant on Google products. The accuracy of these tools has improved tremendously over the past decade. Other uses include autocomplete, where our machine attempts to finish our sentences for us as we write a text or type an email. And yet a third example is generative chat, which has been most popularly manifested in ChatGPT. With this offering, through natural text prompts we can ask a machine to complete various tasks: from providing us an itinerary to vacation in some obscure location to writing a poem for our partner, from writing computer code to crafting an “original” story. The technology can similarly create new images and sounds, based on natural prompts.

Common to all three of these examples is the notion of artificial intelligence as an “auto completer.” In the case of ChatGPT, the program has analyzed countless words, sentences, and paragraphs to learn what a “correct” response is when someone wants to know something. Of course, there are often multiple correct responses, and so ChatGPT may provide a different answer each time it is asked. It also does this intentionally, by randomly choosing among similar responses to “sound more like a conversation.”

What the program is actually doing is converting your prompt into numbers so it can map probabilistically which collection of words best relates to answering your prompt. The more descriptive the prompt, the more likely the response will be correct, assuming a correct response exists.

The Economist published an excellent article explaining the inner workings of generative chat, which can be found here. Those who are not interested in the fine details, or who are not generally given to engineering-like discussions, should feel free to skip to the next section.

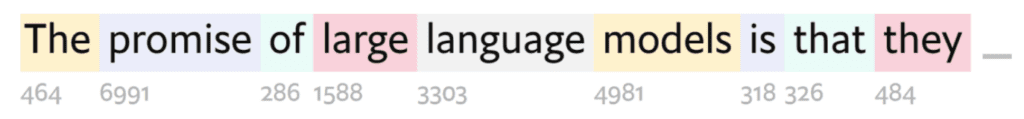

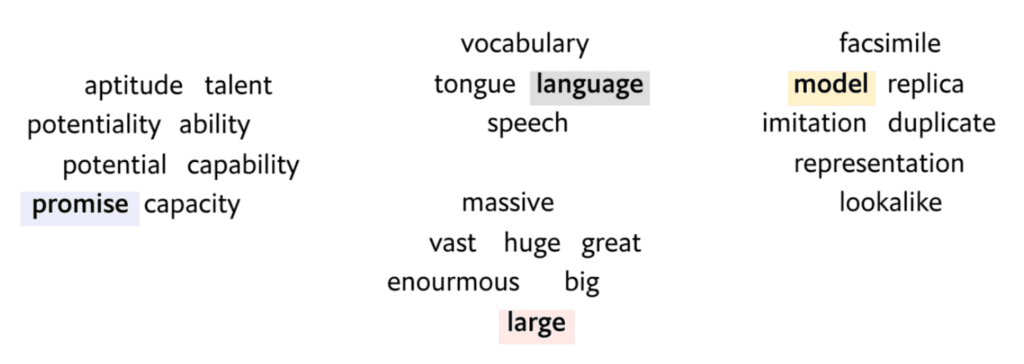

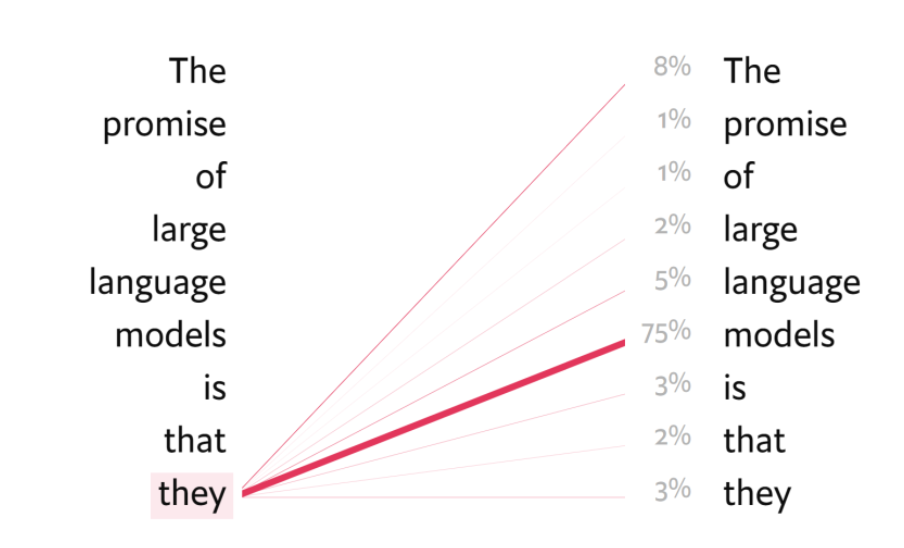

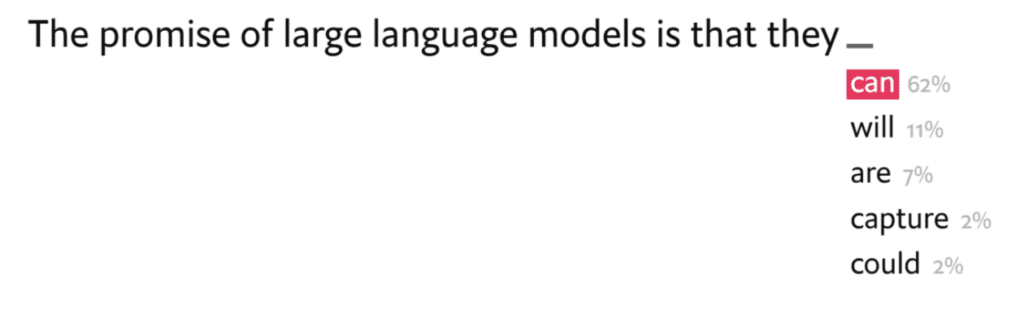

The article outlines four steps the program takes to learn how to chat: Tokenisation, Embedding, Attention, and Completion. The accompanying images are from the article. The article uses the example of a machine learning how to build out the sentence “The promise of large language models is that they ______”. What should the next word be? Here is how that learning process works through four steps:

1. Tokenisation: convert words to numbers

2. Embedding: place words being analyzed closer to other words that have similar meanings

3. Attention: weight words by how important they are in forming connections between words

4. Completion: assign a series of probabilities to the likelihood a word should appear next. Repeat the process until complete.
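For readers who like to see the mechanics, the four steps above can be sketched in a few lines of Python. This is only a toy illustration under loud assumptions, not how ChatGPT is actually built: the twelve-word vocabulary, the function names, and the random "embeddings" are all hypothetical placeholders. A real model learns its word vectors and attention weights from billions of words, so the output here will be gibberish, much like a model at the very start of training.

```python
import math
import random

# Hypothetical toy vocabulary; a real model has tens of thousands of tokens.
VOCAB = ["the", "promise", "of", "large", "language", "models",
         "is", "that", "they", "can", "will", "are"]
TOKEN_ID = {word: i for i, word in enumerate(VOCAB)}


def tokenise(text):
    """Step 1 (Tokenisation): convert words to numbers."""
    return [TOKEN_ID[word] for word in text.lower().split()]


def make_embeddings(dim=4, seed=0):
    """Step 2 (Embedding): one vector per word.

    Assumption: these vectors are random placeholders. In a trained model,
    words with similar meanings end up with nearby vectors.
    """
    rng = random.Random(seed)
    return [[rng.uniform(-1, 1) for _ in range(dim)] for _ in VOCAB]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def softmax(scores):
    """Turn arbitrary scores into probabilities that sum to 1."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(vectors):
    """Step 3 (Attention): weight each prompt word by its similarity to the
    last word, then blend the vectors into a single context vector.
    (Simplified: real attention uses additional learned projections.)
    """
    query = vectors[-1]
    weights = softmax([dot(query, v) for v in vectors])
    return [sum(w * v[i] for w, v in zip(weights, vectors))
            for i in range(len(query))]


def next_word_probabilities(text, embeddings):
    """Step 4 (Completion): a probability for every word in the vocabulary."""
    context = attend([embeddings[i] for i in tokenise(text)])
    probs = softmax([dot(context, e) for e in embeddings])
    return dict(zip(VOCAB, probs))


def complete(text, embeddings, n_words=3, seed=None):
    """Repeat step 4, sampling one word at a time in proportion to its probability."""
    rng = random.Random(seed)
    for _ in range(n_words):
        probs = next_word_probabilities(text, embeddings)
        word = rng.choices(list(probs), weights=list(probs.values()))[0]
        text = text + " " + word
    return text


if __name__ == "__main__":
    emb = make_embeddings()
    print(complete("the promise of large language models is that they", emb))
```

Because each next word is drawn at random in proportion to its probability, running `complete` without a fixed `seed` can produce a different continuation each time, which mirrors why a chat program may answer the same prompt differently on different occasions.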

To get to this capability of “autocompletion,” the model is trained on billions of words from human-created examples of writing. For example, GPT-3 was trained mostly on data available on the internet from 2016 through 2019. The program practices on the data and continues to do so until it gets better and better at providing responses that are consistent with what was actually written in its learning corpus (i.e., all of the text used to learn how to form responses). Thus, when learning first begins, the machine would speak nothing but gibberish if asked for a response. However, later it can reach the point of providing outputs that sound like a human wrote them, as in the end it is essentially aggregating an enormous collection of human writings. On the whole, the current version of ChatGPT has grown to become quite good at providing believable responses. Nonetheless, that does not mean that the program actually knows what it says to you.

In the end, the program is a gigantic statistical model. Because of this, sometimes it can provide some interesting, even innovative, combinations of words. However, sometimes it can also be quite wrong, as it does not actually understand what it is talking about; it only understands likely correct answers, but not why they are correct beyond being a statistically likely combination of words. This failure of AI to provide a correct answer has been coined “hallucinating.” The challenge is that you never know when the machine is hallucinating unless you already possess the expertise to judge it. For example, I asked ChatGPT to summarize some of my published research. It came back with an authoritative-sounding answer that was, unfortunately, wrong. Instead of summarizing my papers, it summarized some publicly available articles on the internet that were on the same subject as my papers. Many published academic papers are locked in ivory towers behind paywalls and, unfortunately, are not so easy to access. To be clear, if ChatGPT had been trained on academic papers including mine, it would likely have succeeded in its task. But it’s a great reminder of one of the fundamental laws of life: garbage in, garbage out. Or in this case, not the right stuff in → the wrong stuff out.

A further challenge in using generative chat is that it cannot generally provide sources because its statistical model is trained on myriad sources, and it doesn’t know which particular sources are creating a certain output. A fellow professor and Omega client asked ChatGPT to provide references after it provided some historical analysis. It provided references for its sources, even with page numbers. Unfortunately, none of the references existed.

I also tested ChatGPT by asking it to write some programming code for a Monte Carlo simulation: rather than only using off-the-shelf Monte Carlo financial planning software at Omega, we often encounter a more complicated situation that benefits from the kind of custom programming we do in academia. ChatGPT very quickly provided me a lot of code. However, there were some critical errors that didn’t allow it to work correctly. I was able to fix those since I’m familiar with the language. And to be fair, the programming language I tasked it with (Stata) is not as common as others, such as Python, that have voluminous publicly available code samples and advice on the internet. In its current form, I’ve determined that ChatGPT does not materially improve my productivity in programming custom Monte Carlo simulations. Nonetheless, I think it is likely that this can change in the future. And it certainly saves some time for programmers using more common languages. As one Omega client, who is also an IT expert, shared with me, additional AI benefits can include creating reports more quickly, speeding up website updates, and generating interview questions, to name just a few. Of course, as our client also pointed out, just as AI can help good people do more good, it can also help bad people be more productive in doing more bad: AI can be used to more readily impersonate other people, write phishing emails, and create malicious code. AI can also provide biased results and violate copyright law, depending on what it is trained on.

At this point you might be wondering whether, given these potential benefits, AI is really worth all the noise and excitement right now. Has a revolution really begun? Quite possibly.

How come people think this AI stuff is so valuable?

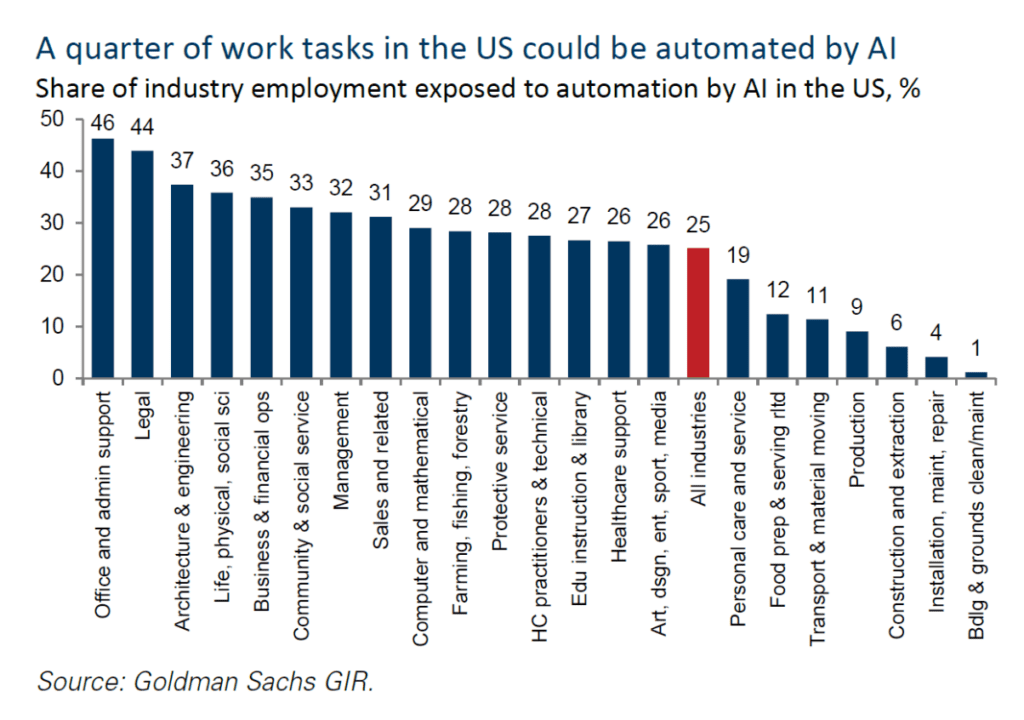

To the extent workers can use generative chat to be more productive, it could have a significant impact on profitability and even economic growth. This is no different from observing productivity booms from the telephone, automobile, personal computer, and the internet. Of course, some kinds of jobs will have greater opportunity than others for productivity improvement from generative chat and AI more broadly. The accompanying chart shows the potential impact of AI on jobs by noting what fraction of tasks could ultimately be replaced by AI for various fields:

This chart suggests about 25% of all jobs in the US could potentially be automated. Legal services and administrative support have the most tasks at risk of being taken over by AI. As these tasks are replaced, some workers can engage in more productive tasks. And others will simply no longer have a job. Accounting for that fact, the above data suggests about 7% of current jobs could be eliminated by AI.

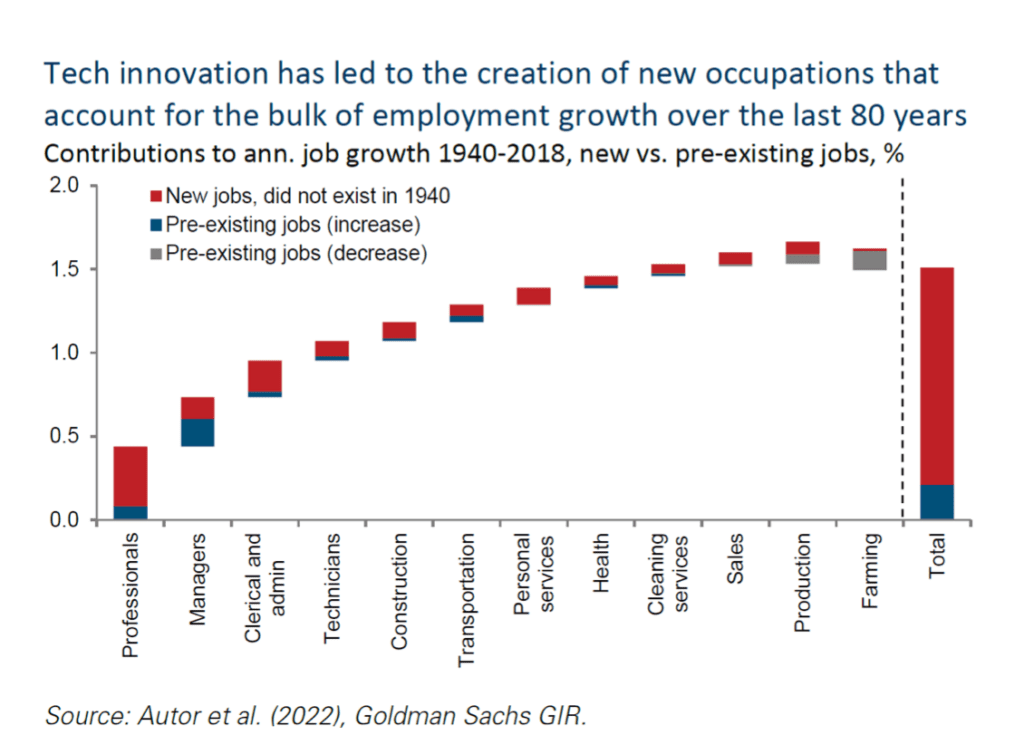

This would not be the first time jobs have been eliminated by technology. The good news is many new jobs are created after old ones are eliminated. In fact, it is estimated by Professor Autor from MIT that almost all of the growth in positions since 1940 occurred by creating new jobs that did not even exist at that time. I recently returned from the National Bureau of Economic Research (NBER)¹ meetings in Boston, where we explored several other recent research projects that found AI has actually been improving employment.

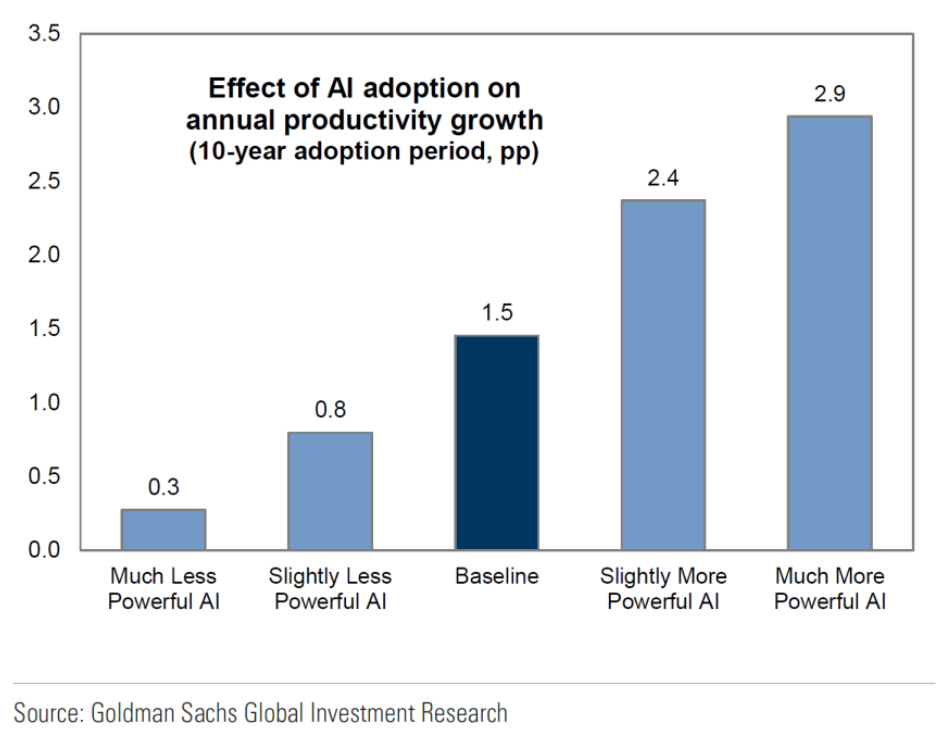

Being able to estimate how AI can impact employment allows us to then estimate its effect on a company’s productivity and thus profitability, as well as the overall economy’s potential growth. In terms of AI-induced productivity potential, Goldman Sachs estimates the baseline scenario as AI adding 1.5% per annum to productivity. The accompanying graph shows each of their scenario estimates.

It must be stressed, however, that these kinds of estimates are far from certain, as many assumptions need to be met to realize a particular outcome. Nevertheless, it is clear that if AI adoption is successful, it will have a material impact on employment.

Implicit in the above productivity gains is the reduction of the workforce; fewer workers to accomplish the same output means more profits and more economic growth. This is why many people have become concerned about the rise of AI. The hope, however, is to retrain workers who lose jobs to AI for other jobs. To be sure, not all new jobs are better, but on average the standard of living has still risen through economic growth over the past centuries, as various technologies have ultimately eliminated jobs but also improved our well-being (e.g., think dishwashers, washing machines, and healthcare).

In addition to improving economic growth and well-being, technology can lead to a greater valuation of the overall stock market, as companies become more profitable. Indeed, Goldman Sachs estimates an increase in the value of the S&P 500 of 5% to 14%, simply from the potential AI adoption by companies. However, these estimates, as cautioned above, are highly uncertain.

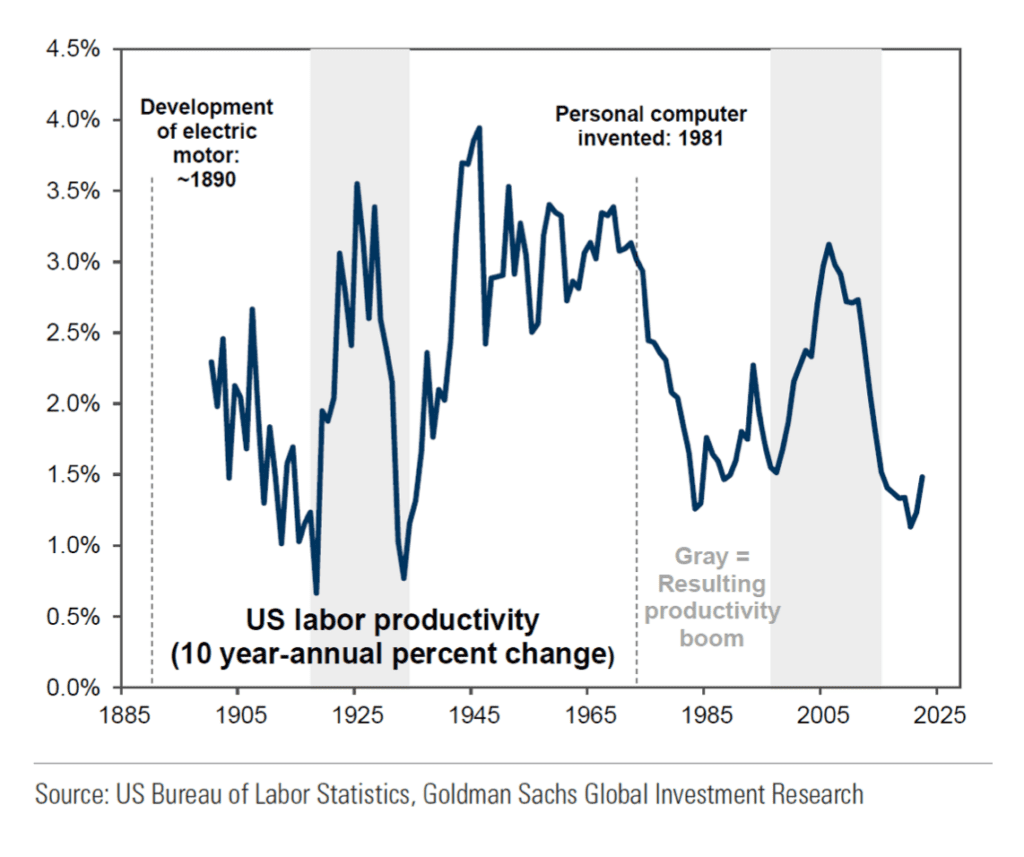

It is also important to note that technological improvements can take a (surprisingly) long time to fully result in improved productivity. Examples include the introduction of the electric motor and personal computer, both of which took about 20 years to induce observable productivity gains. The following chart illustrates this phenomenon, which is largely driven by the fact that adoption of new technologies can take a long time:

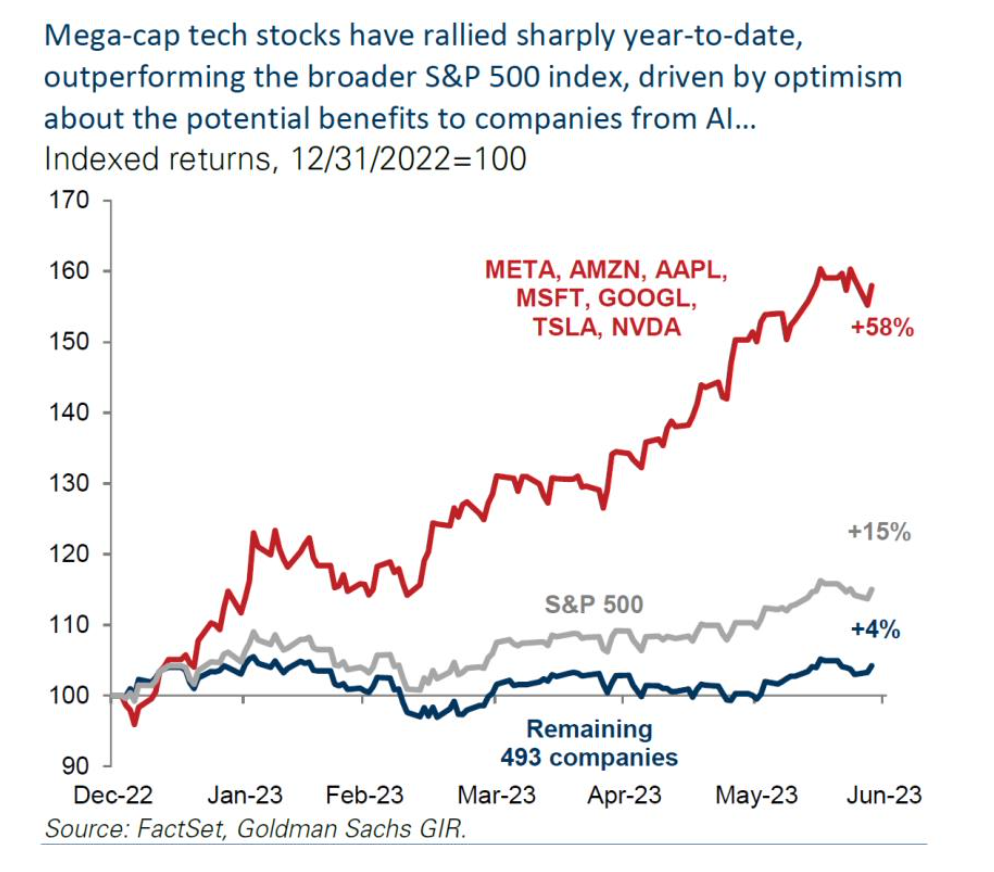

Of course, there are individual companies that stand to benefit from AI right now, regardless of how much AI ultimately improves the overall economy. These companies form the ecosystem of providers in the so-called AI gold rush. Rather than selling picks and shovels, they are selling semiconductors, servers, and cloud space. For example, AI chip provider NVIDIA has shot up a whopping 200% year-to-date from the excitement bubbling over regarding AI. The providers of servers and other hardware are also benefitting. In fact, many of the largest technology companies are benefitting from these recent developments. The likes of Microsoft, Amazon, and Google have all been deeply involved in AI and its development for quite some time. And we should not forget there are many other AI applications outside of the current generative chat excitement, from which these companies have already benefitted. In fact, year-to-date almost all of the growth in the S&P 500 has come from just 7 stocks. As can be seen below, they have had an average return of 58%. The remaining 493 stocks have averaged a more ordinary 4% through June.

Should we be riding these 7 stocks? In small part; we have had a small overweight in these companies through holdings such as QQQ. However, there is much more to do.

So what are we doing about it?

There are several avenues through which we’re taking advantage of the growth of AI, here at Omega. Of course, as suggested above, we are already investing in much of the AI ecosystem through our core index investments. Both large and small technology companies are represented in these investments. We have also begun exploring certain private equity investment options for those settings where a riskier investment in AI can make sense, if desired.

We are also using AI to help generate more complete models and help with investment selection. For example, The Argos AlphaESG Index™ that we created is built on both fundamental and AI methods.

We are also having some additional fun at our office by using voice prompts to do important things like changing our music playlist while still typing a client email, or resolving staff arguments at lunch over whether or not UFOs exist while still holding onto our sandwiches.

Finally, we have also been adding caution to our practice. With AI, it is easy to obtain some erroneous results, as previously outlined. As such, we are reviewing all of our tools to better understand where there are weaknesses that need closer oversight. More broadly, we are making sure we continue to have human engagement as our primary modus operandi, whether it be directing and reviewing program results or providing you a live person to speak with. We are not ready for machines to rule the world yet (or ever), but we are ready to have them help us in some smart ways so that we can provide you a truly valuable offering, with a human touch…

Omega Financial Group, LLC is a Registered Investment Adviser. This commentary is solely for informational purposes. Advisory services are only offered to clients or prospective clients where Omega Financial Group, LLC and its representatives are properly licensed or exempt from licensure. Past performance is no guarantee of future returns. Investing involves risk and possible loss of principal capital. No advice may be rendered by Omega Financial Group, LLC unless a client service agreement is in place.

1. The NBER consists of a group of economists that engages in various US economic projects, from officially dating US recessions to providing influential policy advice.